rsc's Diary: ELC-E 2017 - Day 2

The second day of ELC-E is over. This is my report.

Safety Critical Linux

In the first talk I attended on Tuesday morning, Lukas Bulwahn reported on the current state of the OSADL SIL2LinuxMP project. It deals with the question of whether a Linux-based system can be used for safety-critical systems, as needed for autonomous driving. IEC 61508 asks how to make sure there are no "unacceptable risks", and this project is about finding the answer.

To fulfill the requirements of SIL2, there are several architectural options:

- Check the output of the system with a small piece of safety hardware

- Safety hypervisor

- Use the Linux kernel to isolate critical and non-critical parts of the system

In the third variant, parts of the Linux kernel need to be certified; this is the variant investigated in the project. The challenges are considerable: the project participants are trying to find a process to qualify a kernel with 23 million lines of code and a community-driven development process. The speaker reported that when he presents this project in the safety community, half of the audience leaves the room...

One of the biggest conceptual challenges is that safety software is usually developed with a safety process in mind from day one. As this is not the case with Linux, the project compares the kernel development processes with the required safety processes and closes the identified gaps with its own mechanisms. One example is that they investigated the open() syscall for its safety impact ("Hazard Driven Decomposition, Design & Development"). As part of this overall effort, the project is also developing a small init replacement.

Another activity of the project is analyzing git commits in the kernel repository for certain factors. The "patch impact tester" analyzes which kernel config options a given patch has an influence on. The project also analyzes a minimal kernel patch set. One (IMHO not necessarily right) assumption is that in a longterm kernel the number of bugs found decreases over time (I wonder whether the reason could be that nobody in the community is interested in old kernels), so they prefer old kernels over newer ones. On the bright side, the project is developing a set of analysis tools that will also have a positive impact on the non-safety aspects of kernel quality.
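To make the idea of a patch impact tester more concrete, here is a minimal Python sketch. It is not the project's tool; it only assumes the usual kbuild convention that a Makefile line such as `obj-$(CONFIG_FOO) += foo.o` ties an object file to a config symbol, and it maps the files touched by a unified diff to those symbols. The function names and the simplified parsing are hypothetical.

```python
import posixpath
import re

# Matches kbuild lines like "obj-$(CONFIG_EXT4_FS) += ext4.o"
# or "ext4-$(CONFIG_EXT4_FS_POSIX_ACL) += acl.o" (simplified, hypothetical parser).
KBUILD_LINE = re.compile(r"^[\w-]+-\$\((CONFIG_\w+)\)\s*[+:]?=\s*(.+)$")


def changed_files(diff_text):
    """Return the paths touched by a unified diff, taken from the '+++ b/...' headers."""
    return [line[len("+++ b/"):].strip()
            for line in diff_text.splitlines()
            if line.startswith("+++ b/")]


def config_map(makefile_text, directory):
    """Map 'dir/file.c' source paths to the CONFIG_ symbol that builds them."""
    mapping = {}
    for line in makefile_text.splitlines():
        match = KBUILD_LINE.match(line.strip())
        if not match:
            continue
        symbol, objects = match.groups()
        for obj in objects.split():
            if obj.endswith(".o"):
                # Assume each foo.o is built from foo.c in the same directory.
                mapping[posixpath.join(directory, obj[:-2] + ".c")] = symbol
    return mapping


def impacted_configs(diff_text, makefiles):
    """makefiles: dict of directory -> Makefile contents. Returns sorted CONFIG_ symbols."""
    mapping = {}
    for directory, text in makefiles.items():
        mapping.update(config_map(text, directory))
    return sorted({mapping[f] for f in changed_files(diff_text) if f in mapping})
```

A real tool would also have to follow `#ifdef CONFIG_...` blocks inside files, composite objects and Kconfig dependencies; this sketch only covers the file-level case.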

Industrial Grade Open Source Base Layer Development

The second talk was about the "Civil Infrastructure Platform" (CIP). CIP wants to be a base operating system infrastructure for components such as trains, ticketing systems, energy distribution, industrial control systems, building automation and medical technology. All those systems have in common that they need to be maintained for a very long time, which includes providing security fixes. For example, there are train control systems on the market that have been in operation for more than 50 years. These kinds of systems typically have a development cycle of several years until all development and certification steps are finished. The idea of the project is to have a super longterm kernel (4.4) and other components, such as Debian, supported for more than 10 years.

The talk did not dispel my concern that CIP follows a fundamentally wrong strategy. One of the advantages of Linux is that reasonably modern kernels get a lot of test coverage and community feedback, and thus bugfixes, while kernels as old as those proposed by CIP are no longer really interesting to anyone. So while there are industry people who want to have them, almost nobody is interested in developing and maintaining them anymore. Additionally, we have often seen that customers want stability and new features at the same time, so people start backporting more and more patches over time, which doesn't make things better in any way and ends up in maintenance hell. The only practicable way (in my opinion) is to have proper update systems and follow kernels which are still in upstream maintenance (see Jan Lübbe's talk "Long Term Maintenance, or: How to (Mis-)Manage Embedded Systems for 10+ Years" from ELC-E 2016).

Open Source in Neuroimaging

After the lunch break, Ben Dooks reported on using Open Source to find out what the 1.2 kg mass in his head does. While many methods to visualize the brain are still out of reach for amateurs, some new options have emerged recently: for instance, there are Creative Commons licensed databases with anonymized measurement data.

Another possibility is to measure the electrical communication between neurons (EEG). The relevant voltages are in the µV range, with frequencies of 1-100 Hz, so this kind of signal can be measured safely. There is a Kickstarter-funded open source sensor (OpenBCI) with 16 channels, which can either be built with a 3D printer or bought from a manufacturer. The setup can measure brain waves and visualize which area of the brain the signals come from. Additionally, there are other relevant open source projects (e.g. Brainstorm, OpenEEG, HackEEG).

Another variant is to measure magnetic fields (MEG); the signals are in the 10 fT range. The method is able to produce better images, but due to the low signal strength it is sensitive to noise. Ben has worked on a measurement product that makes use of this mechanism. The control system consists of several nodes measuring up to 600 channels with 24-bit resolution, and the data can be stored and visualized in real time. The system is based on Debian, stores the data in HDF5 format and makes intensive use of OpenGL and Python (NumPy, Arrow, HSPy), while time-sensitive tasks are done in an FPGA. He tried to use open source on the FPGA side as well, but this area remains complicated with regard to open tools.
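To get a feeling for the amount of data such a system produces, here is a quick back-of-the-envelope calculation. Channel count and resolution are from the talk; the sample rate of 1 kHz is an assumption for illustration only, since the report does not state one, and the result ignores framing, timestamps and compression.

```python
def raw_data_rate(channels: int, bits_per_sample: int, sample_rate_hz: float) -> float:
    """Raw acquisition rate in bytes per second (no framing, no compression)."""
    return channels * (bits_per_sample / 8) * sample_rate_hz


CHANNELS = 600            # from the talk
BITS = 24                 # from the talk
ASSUMED_RATE_HZ = 1000    # assumption for illustration, not a figure from the talk

rate = raw_data_rate(CHANNELS, BITS, ASSUMED_RATE_HZ)
print(f"{rate / 1e6:.1f} MB/s, {rate * 3600 / 1e9:.2f} GB per hour")
# -> 1.8 MB/s, 6.48 GB per hour
```

The rate scales linearly with the sample rate, so at an assumed 5 kHz it would already be 9 MB/s.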

UBIFS Security Features

Richard Weinberger reported on security features for UBIFS on NAND flash, a topic which is also interesting for our current work. After a short introduction to the encryption mechanisms available in Linux, he talked about which of them could be used on NAND flash.

One interesting variant is fscrypt, a successor of eCryptfs, which provides encryption integrated into the filesystem (see fs/crypto in the kernel tree). It is possible to specify a policy for each directory in the tree. The keys are handled with the in-kernel keyring. Files the user doesn't have a key for are shown with a base64 variant of their encrypted filename. Tools for fscrypt are still being worked on but will be available soon. Richard explained that porting fscrypt to UBIFS was a bigger challenge than initially expected, but it is now available if you enable the kernel symbol CONFIG_UBIFS_FS_ENCRYPTION.
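Why a base64 *variant*? Encrypted filenames are arbitrary bytes, and standard base64 uses '/', which cannot appear in a filename. A minimal Python sketch of the idea, assuming '+' and ',' as the two extra characters (as in the fs/crypto code of that era) and no '=' padding. The kernel's own encoder packs the bits in a different order, so this is an illustration of the principle, not byte-compatible with what `ls` shows on a real system; the function names are hypothetical.

```python
import base64
import os


def encode_no_key_name(ciphertext: bytes) -> str:
    """Render encrypted filename bytes as a printable name without '/'.

    Illustration only: standard base64 bit order, '+' and ',' as the
    62nd and 63rd characters, '=' padding dropped.
    """
    return base64.b64encode(ciphertext, altchars=b"+,").decode("ascii").rstrip("=")


def decode_no_key_name(name: str) -> bytes:
    """Reverse of encode_no_key_name: restore padding, then decode."""
    padding = "=" * (-len(name) % 4)
    return base64.b64decode(name + padding, altchars=b"+,")


if __name__ == "__main__":
    ciphertext = os.urandom(32)  # stand-in for an encrypted filename
    shown = encode_no_key_name(ciphertext)
    print(shown)
    assert "/" not in shown
    assert decode_no_key_name(shown) == ciphertext
```

Bytes that would produce '/' in plain base64 (for example `b"\xff\xff\xff"`, which is `////`) come out as `,,,,` instead.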

Another interesting feature would be ensuring file integrity on UBIFS. Although IMA (the Integrity Measurement Architecture) exists for this purpose, it is not implemented for UBIFS in mainline, and options to provide it are currently under discussion. One idea is to reuse the keys from fscrypt and only add a separate HMAC for the index tree, in order to avoid unnecessary writes and performance penalties. This topic will continue to be discussed.
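The reasoning behind "only a separate HMAC for the index tree" is that of a hash tree: if every index node stores hashes of the nodes it points to, a single HMAC over the root authenticates everything below it, so not every written node needs its own MAC. A minimal Python sketch of that idea, assuming a toy two-level in-memory tree, SHA-256, and a key that in the proposal would come from the fscrypt key material; none of these structures or names correspond to the UBIFS on-disk format or to a kernel API.

```python
import hashlib
import hmac
from typing import List


def node_hash(data: bytes) -> bytes:
    """Unkeyed hash of a node, stored in its parent index node."""
    return hashlib.sha256(data).digest()


def index_node(children: List[bytes]) -> bytes:
    """Toy index node: the concatenated hashes of its children."""
    return b"".join(node_hash(child) for child in children)


def build_root(groups: List[List[bytes]]) -> bytes:
    """Two-level toy tree: data nodes -> index nodes -> root index node."""
    return index_node([index_node(group) for group in groups])


def root_mac(key: bytes, root: bytes) -> bytes:
    """The only keyed operation: one HMAC over the root index node."""
    return hmac.new(key, root, hashlib.sha256).digest()


def verify(key: bytes, groups: List[List[bytes]], stored_mac: bytes) -> bool:
    """Rebuild the tree from the data nodes and check the stored root HMAC."""
    return hmac.compare_digest(root_mac(key, build_root(groups)), stored_mac)
```

Changing one data node changes only the hashes on its path to the root plus that single HMAC. A real filesystem would verify just the path from a node to the root instead of rebuilding the whole tree as this toy does.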

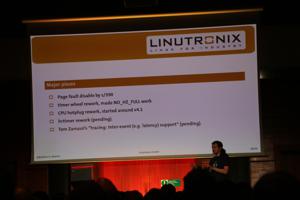

Preempt RT

In my last slot on Tuesday, Sebastian Siewior reported on the state of the Preempt RT patch set. The Linux Foundation collaborative project started in October 2015, and his patch plot shows that during the 3.10-rt cycle, the queue contained a more or less constant number of patches. When 3.14-rt and 3.18-rt were released, the statistics show the beginning of increased activity, and with 4.4-rt it became obvious that mainlining activities had accelerated. So it seems that the funding of the project (first by OSADL, now by the LF) has improved the situation a lot.

Since 4.9, the developers have worked intensively on CPU hotplug; around 4.11, the timer wheel was reworked. He showed the current areas of work but didn't go into detail. Currently, the developers provide a patch set for every second kernel, and especially for the LTS kernels.

From an outside point of view, I still find it difficult to judge the progress of the RT project. A short look into the patch queue still showed many backports from upstream and many old patches, and it's unclear why they haven't gone mainline yet. Other patches are more "tooling" than RT core features. In former times, the series file contained the status of the patches, but that doesn't seem to be the case anymore; the information might have moved elsewhere (I haven't followed the activities closely recently).
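One crude way to judge a patch queue from the outside is simply to count what is in it. A quilt `series` file lists one patch per line and uses `#` for comments. The sketch below assumes, hypothetically, that a comment line acts as a section header (for example a status note) and counts the patches under each; the section names in the usage example are made up and do not reflect the actual -rt queue.

```python
from typing import Dict


def count_by_section(series_text: str) -> Dict[str, int]:
    """Count patches in a quilt series file, grouped by the last '#' comment seen.

    Hypothetical convention: a non-empty comment line starts a new section and
    names it; patches before any comment are counted under "(none)".
    """
    counts: Dict[str, int] = {}
    section = "(none)"
    for raw in series_text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line.startswith("#"):
            # Pure separator lines such as "####" keep the current section.
            section = line.lstrip("#").strip() or section
            continue
        # Every other line is one patch (quilt allows options like -p1 after the name).
        counts[section] = counts.get(section, 0) + 1
    return counts


series = """# Upstream backports
0001-a.patch
0002-b.patch
# Pending for mainline
0003-c.patch -p1

# RT core
0004-d.patch
0005-e.patch
0006-f.patch
"""
print(count_by_section(series))
# -> {'Upstream backports': 2, 'Pending for mainline': 1, 'RT core': 3}
```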

Showcase

During the showcase, our team presented three open source projects relevant to our recent work:

- Etnaviv (Open Source GPU Drivers for i.MX6)

- Labgrid (test automation)

- RAUC (field upgrading)

There were many interesting conversations with the audience.

Rouven Czerwinski and Enrico Jörns showing labgrid and RAUC

Michael Grzeschik + Michael Tretter looking at things

Many, many visitors

Rollout of Embedded Linux updates with RAUC and hawkBit

Etnaviv is now in Linux, Mesa and Wayland/Weston mainline.

Updating with RAUC

Etnaviv discussions
