OSADL Networking Day 2018

Morning Session

Today, Pengutronix engineers Jan Lübbe, Enrico Jörns and Robert Schwebel joined the OSADL Networking Day in Heidelberg. Here is my report about the morning session with the technical talks.

The morning session was introduced by OSADL chairman Dr. Carsten Emde: this year's main topics are the industrial communication standard OPC UA and TSN (Time-Sensitive Networking). Compared to last year, many more participants from OSADL member companies joined the session, so the audience was expecting many interesting talks and discussions.

The first talk by Stefan Bina (B&R Industrial Automation), "OPC - from proprietary DCOM technology to a universal and versatile network protocol of the future and example application", outlined some history of the OPC protocol: starting in 1996, OPC was used for data access, history, alarms and events. OPC UA is the new generation, released in 2009 and standardized by IEC in 2011. While the first variants were not scalable, were Windows-only and had neither security nor data modeling, the new variant has an object-oriented architecture, is independent of the transport method, was designed with security in mind and can be scaled horizontally and vertically.

The lower layers are based on TCP and HTTP, used by "services" such as Read, Write, Browse etc. and an RPC mechanism. The data model contains objects, methods (commands) and events. In terms of footprint, B&R recently developed an IO bus coupler, "Edge Connect", based on a 50 MHz FPGA processor with 800 kB ROM and 700 kB RAM, handling 50 variables in 10 ms and 5 parallel sessions, and monitoring 300 events.

One of the high-level ideas is to have a common abstraction for automation things, e.g. robots, across the industry: the information model is supposed to contain all the information automatically. While the new possibilities can easily be used for new machines, there is still a huge base of old machines already installed in the field. For these machines, the Axoom Gate (https://axoom.com/de/) makes it possible to connect them to the cloud.

One of the recent developments is to extend OPC UA to the lower layers of the automation stack: in IO communication, devices need a deterministic communication mechanism, mostly in a cyclic way. This is where TSN (Time-Sensitive Networking) comes into play. However, this is an area of active development and will be discussed in more detail in the later sessions. Bina concluded that OPC UA has the potential to unify all the different standards of the automation pyramid into one technology stack.

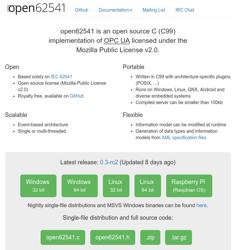

Julius Pfrommer (Fraunhofer IOSB) then talked about "Current status of the open62541 based Open Source implementation of OPC UA Pub/Sub", shedding light on the topic from a bottom-up view. The "normal" OPC UA protocol is request/response-based and defines 37 standard services used to interact with the information model, e.g. to explore the network, call methods etc. He showed a demo of a simulated robot and how the corresponding information model can be explored with the "Unified Automation UaExpert" GUI tool (written in Qt). Changing the values in the tool makes the robot move. Pfrommer then explained the Subscriptions and MonitoredItems mechanisms: MonitoredItems sample attribute values or listen to events emitted by objects, while Subscriptions collect changes in those MonitoredItems and send them out. While one should never write one's own crypto, database and network parsing code, the open62541 project has broken all those rules, because someone has to provide the basic infrastructure. The project provides a platform-independent Open Source OPC UA stack, licensed under the MPLv2 and written in C99. The project started in 2013 and has about 5,000 commits now. The footprint of a minimal server is about 100 kB; a single-core machine is able to handle about 16,000 requests per second.
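
The division of labour between MonitoredItems and Subscriptions described above can be illustrated with a small, library-free Python sketch. This is not the open62541 API; the class names, the `read` accessor and the `robot` dictionary are assumptions made purely for illustration.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, List, Optional, Tuple


@dataclass
class MonitoredItem:
    """Samples one value source and remembers the last value it saw."""
    name: str
    read: Callable[[], Any]  # hypothetical accessor for the monitored attribute
    _last: Any = field(default=None, init=False)
    _seen: bool = field(default=False, init=False)

    def sample(self) -> Optional[Tuple[str, Any]]:
        """Return (name, value) if the value changed since the last sample."""
        value = self.read()
        if self._seen and value == self._last:
            return None
        self._last, self._seen = value, True
        return (self.name, value)


@dataclass
class Subscription:
    """Collects changes from its monitored items and sends them out in batches."""
    items: List[MonitoredItem] = field(default_factory=list)
    _pending: List[Tuple[str, Any]] = field(default_factory=list, init=False)

    def sample_all(self) -> None:
        for item in self.items:
            change = item.sample()
            if change is not None:
                self._pending.append(change)

    def publish(self) -> List[Tuple[str, Any]]:
        """Return all queued changes and clear the queue."""
        batch, self._pending = self._pending, []
        return batch


# Hypothetical robot state standing in for attributes of the information model.
robot = {"joint1": 0.0, "joint2": 90.0}
sub = Subscription([
    MonitoredItem("joint1", lambda: robot["joint1"]),
    MonitoredItem("joint2", lambda: robot["joint2"]),
])

sub.sample_all()
first = sub.publish()    # initial values of both joints
robot["joint1"] = 45.0
sub.sample_all()
second = sub.publish()   # only the joint that moved
```

In this sketch the first batch carries both initial values, while the second contains only the changed joint, which is the point of collecting changes instead of polling every value.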

The official conformance testing tools are used by members of the OPC Foundation, and the codebase passes all conformance tests for the supported features. In addition, the team uses many state-of-the-art quality tools like Travis, whitebox fuzzing etc. There is extensive documentation available. Some time ago it was identified that, in addition to the built-in push notifications, a publish/subscribe pattern is required; this allows instances to be loosely coupled, just sending publications to a common bus, and lets receivers subscribe to the information they are interested in. Without pub/sub, servers need to send out notifications to every client that is connected; this is resource-hungry, especially if encryption comes into play. The pub/sub mechanism was developed during the last year and has recently been merged into the codebase. A demonstrator has been built in an OSADL-organized consortium, using hardware from four different vendors. A second phase of the project is currently being planned.
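
The loose coupling that pub/sub provides can be shown with a toy in-process bus. This is a minimal sketch of the pattern only, not of the OPC UA Pub/Sub wire protocol or of open62541; the `Bus` class and the topic names are hypothetical.

```python
from typing import Any, Callable, Dict, List


class Bus:
    """A toy message bus: publishers and subscribers only share topic names."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Any], None]]] = {}
        self.messages_sent = 0

    def subscribe(self, topic: str, callback: Callable[[Any], None]) -> None:
        self._subscribers.setdefault(topic, []).append(callback)

    def publish(self, topic: str, payload: Any) -> None:
        # The publisher emits one message and does not know who receives it.
        self.messages_sent += 1
        for callback in self._subscribers.get(topic, []):
            callback(payload)


bus = Bus()
hmi_log: List[float] = []
cloud_log: List[float] = []
alarm_log: List[str] = []

bus.subscribe("plant/temperature", hmi_log.append)
bus.subscribe("plant/temperature", cloud_log.append)
bus.subscribe("plant/alarms", alarm_log.append)

bus.publish("plant/temperature", 21.5)
bus.publish("plant/temperature", 22.0)
```

The publisher sends two messages regardless of how many receivers are attached; each receiver only sees the topics it subscribed to.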

Yours truly used the time of the talk to quickly check whether open62541 cross-compiles for embedded systems; this has been a bigger issue for other automation frameworks like ROS in the past (which invented their own make system, not taking care of cross-compiling at all). The exercise turned out to be pretty easy, as the project is using CMake. An experimental DistroKit branch can be found at https://git.pengutronix.de/cgit/DistroKit/log/?h=rsc/topic/open62541 .

After the coffee break, Pekka Varis (Texas Instruments) reported about "Realtime performance of Ethernet driver and Linux networking stack on Sitara AM572x processors". IEEE 802.1Q and TSN enable single-digit microsecond-level jitter for realtime traffic, but most upper layers of today's network concepts are not prepared for this use case; as a result, RTOSes are still used. The device he analyzed is an AM5728, containing a switch; his team measured the time packets need to pass the hardware and software layers, from the wire to the application, using the IEEE 1588 mechanisms built into the device. In most cases, packets need 20...35 µs from the userspace socket-based application to MII; while this is fast, it isn't enough for industrial control protocols like ProfiNet. He covered techniques currently discussed in the Linux community, e.g. the Credit Based Shaper (CBS), or newer capabilities like 802.1Qbv; the latter seems to show good results for jitter to the wire. His conclusion is that, with a time-aware shaper (Qbv), one needs to make sure the packet is available on the wire when the timeslot opens; typical applications need minimized latency to the wire. While 200 µs are possible today, it will be necessary to be 1-2 orders of magnitude faster in the future.

Siddharth Ravikumar (Kalycito Infotech) talked about "What is TSN and overview of industrial automation control system traffic types in a TSN network". Time-sensitive mechanisms in standard Ethernet have a longer history, originating from audio/video and automotive scenarios; they can provide fieldbus-level determinism in packet delivery and timing. TSN adds time awareness to the Ethernet stack: recently discussed topics cover basic switching, support for streams, support for synchronisation, time triggering and reliable communication, while allowing normal communication on the same network. He gave an overview of all the currently available and discussed standards, of which there are quite a few. The most important one is probably 802.1AS, the current variant for precise time synchronisation. The "time aware shaper" technique makes it possible to define several queues for network packets, attaching priorities and an algorithm that controls the "gate" when a queue opens to the wire. Taking care of one device alone isn't enough: all those schedules need to be distributed in the network with NETCONF over TLS (RFC5539). Ravikumar then gave an overview of the different traffic types that occur in industrial setups. He then looked at the platforms they are currently working on: i210, Cyclone V SoC, Zynq, AD fido5000 REM switch (can be attached to the memory bus of an application processor) and TI Sitara PRU. Finally, the hope is that once the device vendors have all those standard techniques ported to their devices, it will be possible to have much better interoperability than we ever had in industrial communication. The next step is to perform intensive performance measurements.
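
The "gate" idea behind the time aware shaper can be sketched as a cyclic schedule that says which queues may transmit in which time window. The following Python model is a simplification under stated assumptions: the schedule values are invented, and guard bands and frame lengths are ignored.

```python
from typing import List, Set, Tuple

# Each entry: (window length in ns, set of queue numbers whose gate is open).
GateControlList = List[Tuple[int, Set[int]]]


def open_queues(gcl: GateControlList, time_ns: int) -> Set[int]:
    """Return the queues whose gate is open at time_ns (the schedule repeats)."""
    cycle_ns = sum(duration for duration, _ in gcl)
    offset = time_ns % cycle_ns
    for duration, queues in gcl:
        if offset < duration:
            return queues
        offset -= duration
    raise AssertionError("offset is always inside the cycle")


def delay_until_open(gcl: GateControlList, time_ns: int, queue: int) -> int:
    """Return how many ns a frame in `queue` waits at time_ns until its gate is open."""
    if not any(queue in queues for _, queues in gcl):
        raise ValueError(f"queue {queue} never opens in this schedule")
    cycle_ns = sum(duration for duration, _ in gcl)
    offset = time_ns % cycle_ns
    start = 0
    # Walk two consecutive cycles so that a wait across the cycle end is found.
    for duration, queues in gcl + gcl:
        end = start + duration
        if end > offset and queue in queues:
            return max(start - offset, 0)
        start = end
    raise AssertionError("a matching window exists within two cycles")


# Hypothetical 1 ms cycle: queue 7 (critical) owns the first 300 µs,
# queues 0 and 1 (best effort) share the remaining 700 µs.
schedule: GateControlList = [(300_000, {7}), (700_000, {0, 1})]

wait_critical = delay_until_open(schedule, 400_000, 7)
wait_best_effort = delay_until_open(schedule, 100_000, 0)
```

A critical frame that becomes ready 400 µs into the cycle has just missed its window and waits for the next cycle, which is why the application must have its packet ready before the timeslot opens.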

Jan Lübbe (Pengutronix) talked about "State of TSN in mainline Linux". His team provides mainline kernel based Linux systems to customers, so he evaluated which TSN techniques are already in the mainline kernel, which are work in progress and which are still needed in the future. There have been several previous projects dealing with TSN topics, such as OpenAVB, Henrik Austad's AVB experiments and userspace-based (mostly closed) stacks: the problem with these approaches is that they are often very device- and vendor-specific, single-application and generally difficult to use for best-effort traffic; not what we expect from a high-quality Linux stack. For in-kernel mechanisms, tc provides a configuration interface for traffic shaping and scheduling, based on queueing disciplines (qdisc) which can be offloaded to hardware. On the sending side, precise transmit times are important in many use cases; one example is sending packets in predefined time windows. 802.1AS (PTP) support has a longer history in the kernel; many drivers support hardware timestamping and offset measuring. On the userspace side, this is supported by linuxptp. The next topic is 802.1Qav (Credit Based Shaper), which was developed by Intel, went into the mainline kernel in 4.15 and is implemented as a tc qdisc. It makes it possible to ensure that bandwidth is not overloaded. Support for i210 is already mainline as well, while for other hardware such as TI CPSW there are already patches on the mailing list. Time Based Packet Transmission is currently under review on the mailing lists: the mechanism queues and sorts the packets and makes sure they are sent out with µs accuracy once their TXTIME is reached. The patch series is at v3, but it seems as if the current userspace interface is too specific, so the design will probably be changed based on the review.
For the receive side, the situation is completely different: we want to have low latency for critical traffic, while keeping the possibility to have any other traffic in parallel.
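
As an aside on the transmit side: the queue-and-sort behaviour of time-based packet transmission mentioned above can be modelled in a few lines. This Python sketch only illustrates the ordering logic; it is not the kernel interface under review, and the class and method names are hypothetical.

```python
import heapq
from typing import List, Tuple


class TxTimeQueue:
    """Holds packets sorted by their requested transmit time."""

    def __init__(self) -> None:
        self._heap: List[Tuple[int, int, bytes]] = []
        self._seq = 0  # tie-breaker: keeps insertion order for equal transmit times

    def enqueue(self, txtime_ns: int, payload: bytes) -> None:
        heapq.heappush(self._heap, (txtime_ns, self._seq, payload))
        self._seq += 1

    def dequeue_due(self, now_ns: int) -> List[bytes]:
        """Pop every packet whose transmit time has been reached, earliest first."""
        due = []
        while self._heap and self._heap[0][0] <= now_ns:
            due.append(heapq.heappop(self._heap)[2])
        return due


queue = TxTimeQueue()
# Packets are enqueued out of order; the queue sorts them by transmit time.
queue.enqueue(3_000, b"c")
queue.enqueue(1_000, b"a")
queue.enqueue(2_000, b"b")

too_early = queue.dequeue_due(500)
on_time = queue.dequeue_due(2_000)
rest = queue.dequeue_due(10_000)
```

Nothing leaves the queue before its transmit time, and packets that are due leave in transmit-time order regardless of the order in which the application queued them.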

The XDP (eXpress Data Path) mechanism makes it possible to run BPF programs quickly and sort incoming packets even before SKBs are allocated; it might be an alternative to previously used userspace network stacks. With XDP, a packet can bypass most of the Linux network stack on its way from hardware to the application. Patches are in v4.18-rc1, but the userspace interface is still under discussion and might disappear again before the final 4.18 release. Zero-copy mode is also under discussion. There are also ideas around to dedicate one CPU core to busy polling, so if you can afford to lose a CPU, all the interrupt latency should be gone. Lübbe then gave an overview of further topics not yet addressed in the kernel. 802.1Qcc (Improved Stream Reservation) will be interesting for configuring the network, but there is no open source implementation available right now. Another interesting area is switchdev: Linux is gaining more and more support for controlling switches, but nobody has worked on TSN issues there. The talk concluded with a hint to the audience to contribute, try things out and give feedback.
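
The kind of early sorting decision described above can be illustrated by a classifier that looks only at the raw Ethernet header. Real XDP programs are BPF code running in the kernel; the Python below is merely a model of the decision, and the "fast"/"stack" labels, the priority threshold and the `build_frame` helper are assumptions for illustration.

```python
import struct

ETH_P_8021Q = 0x8100  # tag protocol identifier of an 802.1Q VLAN tag


def classify(frame: bytes, critical_pcp: int = 6) -> str:
    """Return "fast" for VLAN frames with priority >= critical_pcp, else "stack"."""
    if len(frame) < 18:
        return "stack"  # too short to carry a VLAN-tagged header
    # Bytes 0-11 are the destination and source MAC; the tag follows at offset 12.
    tpid, tci = struct.unpack_from("!HH", frame, 12)
    if tpid != ETH_P_8021Q:
        return "stack"
    pcp = tci >> 13  # priority code point: the top 3 bits of the tag control field
    return "fast" if pcp >= critical_pcp else "stack"


def build_frame(pcp=None, ethertype=0x0800, payload=b"\x00" * 46) -> bytes:
    """Hypothetical test helper: build a frame, VLAN-tagged if pcp is given."""
    header = b"\xff" * 6 + b"\x02\x00\x00\x00\x00\x01"
    if pcp is not None:
        tci = (pcp << 13) | 100  # VLAN ID 100, chosen arbitrarily
        header += struct.pack("!HH", ETH_P_8021Q, tci)
    return header + struct.pack("!H", ethertype) + payload


critical = classify(build_frame(pcp=7))
background = classify(build_frame(pcp=2))
untagged = classify(build_frame())
```

Only the high-priority tagged frame is steered to the fast path; everything else continues through the normal network stack, so best-effort traffic keeps working in parallel.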

The final slot before lunch by Siddharth Ravikumar (Kalycito) was about "TSN and OPC UA under Linux - how are they connected?". The current goal of the group is to connect two Linux systems using OPC UA and TSN, without any switches (those will be taken care of later) and put certain test loads onto the communication. The test setup shall run in 24×7×365 operation. The first testcase with two systems connects an external oscilloscope to outputs of both systems, to have the time stamping independent of the devices-under-test and with about 20 ns accuracy.

Measurements have shown that PTP reaches ±100 ns accuracy in most cases, but there are some longer latencies: an 8 h test shows a worst case of about 1 µs. The end-to-end test with a DIO line shows a jitter of ±20 µs between the signal edges of the two systems; however, tests show that latencies on the same system are in the same range. The aim is to first stabilize on the ±20 µs, then try to improve to about ±1 µs.
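
How such an edge-to-edge comparison could be evaluated is sketched below. The timestamps are invented sample data, not measurements from the talk, and the function names are hypothetical; the sketch assumes both edge lists are already paired up in the same order.

```python
from typing import Dict, List


def edge_offsets(edges_a_ns: List[int], edges_b_ns: List[int]) -> List[int]:
    """Offset of each edge on system B relative to the matching edge on system A."""
    return [b - a for a, b in zip(edges_a_ns, edges_b_ns)]


def jitter_summary(offsets_ns: List[int]) -> Dict[str, float]:
    """Minimum, maximum, mean and peak-to-peak spread of the offsets."""
    lo, hi = min(offsets_ns), max(offsets_ns)
    return {
        "min": lo,
        "max": hi,
        "mean": sum(offsets_ns) / len(offsets_ns),
        "peak_to_peak": hi - lo,
    }


# Invented edge timestamps in ns, as an oscilloscope capture might provide them.
system_a = [0, 1_000_000, 2_000_000, 3_000_000]
system_b = [5_000, 1_012_000, 1_998_000, 3_001_000]

offsets = edge_offsets(system_a, system_b)
summary = jitter_summary(offsets)
within_target = all(abs(offset) <= 20_000 for offset in offsets)  # ±20 µs goal
```

Working in integer nanoseconds avoids rounding issues when the offsets are small compared to the absolute timestamps.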
