Fixing ICECC, our Workhorse for Building BSPs

While developing operating system infrastructure for industrial devices for our customers, we build lots of embedded Linux board support packages, kernels, bootloaders etc. at Pengutronix. ICECC should be a good tool for distributing these compile jobs across a cluster of machines, but it recently turned out that things are not that simple.

Building embedded Linux systems is a time-consuming task: in order to make them reproducible, the whole software stack is usually compiled with a build system, for instance PTXdist or Yocto/OE. Even for a medium-sized project, about 100 million lines of code have to be compiled. The advantage is that building the code is an everyday job, well tested and exercised, so everything is under control, and in case of issues we know that we can instrument the code and find out what goes wrong. The downside is that compilation on a single PC takes a lot of time.

At Pengutronix we have been using ICECC for several years, spreading compile jobs over the available CPUs in the office. Over the years the teams have grown and CPU and storage bandwidth had to be scaled up, so we recently got a new dedicated compile cluster. However, when setting up the new hardware, it turned out that ICECC itself had software issues that made the tool less helpful than expected.

The ICECC project was developed at SUSE several years ago, based on distcc. A central server machine – we have one of those in each cluster – runs a scheduler that dynamically distributes compile jobs to the fastest free CPU nodes in the cluster.
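
To make the "fastest free node" idea concrete, here is a minimal sketch of such a selection rule. It is a toy model, not ICECC's actual scheduler code: the `Node` class, its fields and the speed values are all assumptions made up for illustration.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    speed: float      # hypothetical speed rating, higher means faster
    slots: int        # maximum number of parallel compile jobs
    running: int = 0  # jobs currently assigned to this node

    def has_free_slot(self) -> bool:
        return self.running < self.slots


def pick_fastest_free(nodes):
    """Return the fastest node that still has a free slot, or None."""
    free = [n for n in nodes if n.has_free_slot()]
    if not free:
        return None
    return max(free, key=lambda n: n.speed)


def schedule(nodes, job_count):
    """Assign job_count jobs one by one and return the chosen node names."""
    placement = []
    for _ in range(job_count):
        node = pick_fastest_free(nodes)
        if node is None:
            break  # cluster is full; a real scheduler would queue the job
        node.running += 1
        placement.append(node.name)
    return placement


# Two nodes of almost identical speed, four job slots each (made-up values).
cluster = [Node("a", speed=10.2, slots=4), Node("b", speed=10.0, slots=4)]
print(schedule(cluster, 5))  # ['a', 'a', 'a', 'a', 'b']
```

Note that in this toy model a marginally faster node is filled completely before its neighbour receives a single job.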

Unfortunately, the heuristics that decide which machine a job is sent to showed the age of the code:

- Machines with more than 128 MB of free RAM (which is quite common on modern machines) were always considered to have no memory load, which is obviously not true.

- Another problem was that in a cluster with machines of roughly the same speed, the algorithm was fully loading the fastest machine instead of distributing the load equally over the available resources. This led to some machines being overloaded (stepping down from their highest clock speeds and using sibling threads), while others were sitting idle.

- From time to time, ICECC downrated a node due to external influences outside of its control. Once that happened, the downrated node never got any new jobs assigned and was thus unable to get a new (possibly better) speed rating calculated, until the cluster was loaded heavily enough for jobs to spill over to those "slow" nodes.
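
Two of these effects can be illustrated with a few lines of Python. This is a hedged toy model, not the code from ICECC: only the 128 MB threshold comes from the description above, while the formulas, function names and example numbers are assumptions chosen for illustration.

```python
def memory_load_fixed(free_mb, threshold_mb=128.0):
    """Toy model of a fixed-threshold metric: load in [0, 1].

    Any machine with more free RAM than the threshold reports load 0.
    """
    return max(0.0, 1.0 - free_mb / threshold_mb)


def memory_load_relative(free_mb, total_mb):
    """One possible alternative (an assumption): load relative to total RAM."""
    return 1.0 - free_mb / total_mb


def concurrent_jobs_needed(ratings, slots, node):
    """Jobs that must run at once before `node` gets work under fastest-first.

    All slots on every faster node have to be occupied first.
    """
    faster = [n for n in ratings if ratings[n] > ratings[node]]
    return sum(slots[n] for n in faster) + 1


# A hypothetical 64 GiB machine with only 2 GiB left looks idle to the fixed metric.
print(memory_load_fixed(2048))            # 0.0
print(memory_load_relative(2048, 65536))  # 0.96875

# Node "c" was downrated once; with 16 slots on each faster node it only
# receives a job (and a chance to be re-rated) at 33 concurrent jobs.
ratings = {"a": 10.0, "b": 10.5, "c": 2.0}
slots = {"a": 16, "b": 16, "c": 16}
print(concurrent_jobs_needed(ratings, slots, "c"))  # 33
```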

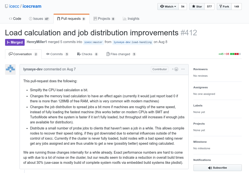

At this year's Pengutronix Technology Week, I took some time and reworked the algorithms to fix those issues. The resulting pull request was merged into ICECC in August 2018.

Exact performance numbers are hard to obtain because there is a lot of noise on the cluster, but our results seem to indicate a reduction in overall build times of about 30% for a typical PTXdist-based rootfs.
